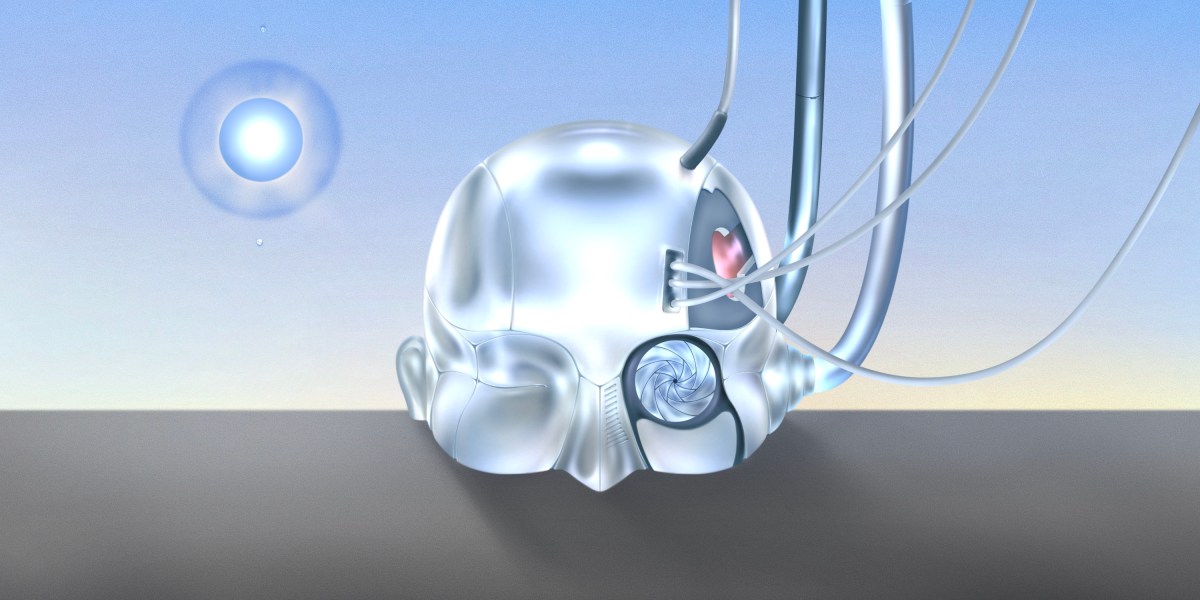

Stable Diffusion - fMRI mind reading

- Thread starter pmbug

AI tech taking this to the next level...

www.theguardian.com

See also:

www.nature.com

An AI-based decoder that can translate brain activity into a continuous stream of text has been developed, in a breakthrough that allows a person’s thoughts to be read non-invasively for the first time.

The decoder could reconstruct speech with uncanny accuracy while people listened to a story – or even silently imagined one – using only fMRI scan data. Previous language decoding systems have required surgical implants, and the latest advance raises the prospect of new ways to restore speech in patients struggling to communicate due to a stroke or motor neurone disease.

Dr Alexander Huth, a neuroscientist who led the work at the University of Texas at Austin, said: “We were kind of shocked that it works as well as it does. I’ve been working on this for 15 years … so it was shocking and exciting when it finally did work.”

The achievement overcomes a fundamental limitation of fMRI: while the technique can map brain activity to a specific location with very high spatial resolution, there is an inherent time lag, which makes tracking activity in real time impossible.

The lag exists because fMRI scans measure the blood flow response to brain activity, which peaks and returns to baseline over about 10 seconds, meaning even the most powerful scanner cannot improve on this. “It’s this noisy, sluggish proxy for neural activity,” said Huth.

This hard limit has hampered the ability to interpret brain activity in response to natural speech because it gives a “mishmash of information” spread over a few seconds.
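For anyone who wants to see why the lag blurs speech together, here is a rough Python sketch. It is only an illustration, not anything from the article or the paper: the gamma-shaped response curve, its `shape` and `scale` values, and the function names are all assumptions chosen so the response rises, peaks after a few seconds, and is mostly gone by about 10 seconds.

```python
import math

def hrf(t, shape=5.0, scale=1.0):
    """Toy gamma-shaped blood-flow response at t seconds after a neural event.

    The parameters are illustrative assumptions, not measured values.
    """
    if t <= 0:
        return 0.0
    return (t ** (shape - 1)) * math.exp(-t / scale) / math.gamma(shape)

def bold_signal(event_onsets, duration_s, dt=0.1):
    """Add up one sluggish response per event onset, sampled every dt seconds."""
    n_samples = int(round(duration_s / dt))
    return [sum(hrf(i * dt - onset) for onset in event_onsets)
            for i in range(n_samples)]

def peak_time(signal, dt=0.1):
    """Time in seconds at which the simulated signal is largest."""
    return max(range(len(signal)), key=signal.__getitem__) * dt

def count_local_maxima(signal):
    """Number of separate bumps visible in the simulated signal."""
    return sum(1 for i in range(1, len(signal) - 1)
               if signal[i - 1] < signal[i] > signal[i + 1])

if __name__ == "__main__":
    one_word = bold_signal([0.0], 30.0)
    two_words = bold_signal([0.0, 1.0], 30.0)
    print("single event peaks at", peak_time(one_word), "s")
    print("bumps seen for two events 1 s apart:", count_local_maxima(two_words))
```

In this toy model, two events one second apart show up as a single merged bump in the signal, which is the "mishmash" problem in miniature.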

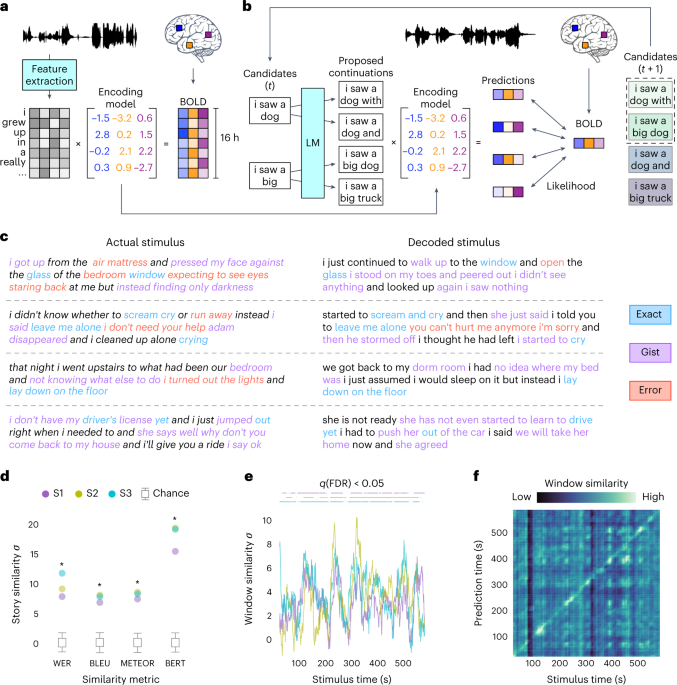

However, the advent of large language models – the kind of AI underpinning OpenAI’s ChatGPT – provided a new way in. These models are able to represent, in numbers, the semantic meaning of speech, allowing the scientists to look at which patterns of neuronal activity corresponded to strings of words with a particular meaning rather than attempting to read out activity word by word.

The learning process was intensive: three volunteers were required to lie in a scanner for 16 hours each, listening to podcasts. The decoder was trained to match brain activity to meaning using a large language model, GPT-1, a precursor to ChatGPT.

Later, the same participants were scanned listening to a new story or imagining telling a story and the decoder was used to generate text from brain activity alone. About half the time, the text closely – and sometimes precisely – matched the intended meanings of the original words.
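To make the "match brain activity to meaning" idea concrete, here is a toy Python sketch. It is one plausible reading of the description above, not the researchers' actual code: the three-number "embeddings", the `WEIGHTS` matrix standing in for a trained model, and the function names are all made up for illustration. A real system would take the meaning vectors from a language model and learn the weights from the hours of scan data.

```python
import math

# Hypothetical meaning vectors for a few candidate phrases (invented values).
EMBEDDINGS = {
    "she drove to the store": [0.9, 0.1, 0.0],
    "he cooked dinner at home": [0.1, 0.8, 0.2],
    "the dog ran across the field": [0.0, 0.2, 0.9],
}

# Hypothetical trained weights: one row per brain region (voxel),
# one column per embedding dimension.
WEIGHTS = [
    [1.0, -0.5, 0.2],
    [0.3, 0.9, -0.4],
    [-0.6, 0.1, 1.1],
    [0.5, 0.5, 0.5],
]

def predict_response(embedding):
    """Predict the brain response a phrase with this meaning would evoke."""
    return [sum(w * x for w, x in zip(row, embedding)) for row in WEIGHTS]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def decode(observed_response):
    """Return the candidate phrase whose predicted response best fits the scan."""
    return max(
        EMBEDDINGS,
        key=lambda phrase: cosine(predict_response(EMBEDDINGS[phrase]),
                                  observed_response),
    )

if __name__ == "__main__":
    # Simulate a noisy scan taken while the subject hears the second phrase.
    clean = predict_response(EMBEDDINGS["he cooked dinner at home"])
    noise = [0.05, -0.05, 0.05, -0.05]
    observed = [c + n for c, n in zip(clean, noise)]
    print(decode(observed))
```

The point of the sketch is that the decoder never reads individual words off the scan. It compares the scan against what each candidate meaning would be expected to look like and keeps the best fit, which is why the output can match the gist rather than the exact wording.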

...

AI makes non-invasive mind-reading possible by turning thoughts into text

Advance raises prospect of new ways to restore speech in those struggling to communicate due to stroke or motor neurone disease

See also:

Semantic reconstruction of continuous language from non-invasive brain recordings - Nature Neuroscience

Tang et al. show that continuous language can be decoded from functional MRI recordings to recover the meaning of perceived and imagined speech stimuli and silent videos and that this language decoding requires subject cooperation.

AI is learning how to create itself

Humans have struggled to make truly intelligent machines. Maybe we need to let them get on with it themselves.